Connecting to Tasks

When Calico is used to network Mesos tasks, each task is assigned a uniquely addressable IP address.

Because these IP addresses are assigned dynamically and may change when a task is redeployed, it is recommended to use one of the service discovery solutions available for Mesos.

Mesos-DNS

Important: Modifying Mesos-DNS in DC/OS may break components that rely on Mesos-DNS resolving to Agent IPs. Instead, it is recommended to use the Navstar DNS entries described in the Navstar / Spartan DNS section below.

Mesos-DNS is an open source DNS service for Mesos which gathers task information from the Mesos Master and uses it to serve DNS entries which resolve to a task’s IP. Mesos-DNS provides the following DNS entry for tasks launched by Marathon:

```
<task-name>.marathon.mesos
```

By default, Mesos-DNS is configured to return the IP address of the Agent running the task.

When using Calico, Mesos-DNS must be reconfigured to return the task’s Container IP instead.

To do this, modify its configuration so that it preferentially resolves to a task’s netinfo, by setting the IPSources field as follows:

```json
{
  "IPSources": ["netinfo", "host"]
}
```
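If you manage the Mesos-DNS configuration file with a script, the change can be applied as sketched below. This is a minimal Python sketch under stated assumptions: the helper names are hypothetical, the sample config keys other than `IPSources` are illustrative, and `pick_task_ips` is a simplified model of an ordered `IPSources` list (the first source that reports an address wins), not Mesos-DNS's actual implementation.

```python
import json


def prefer_container_ips(config_text: str) -> str:
    """Return the Mesos-DNS config JSON with IPSources set to prefer netinfo.

    Hypothetical helper: only the "IPSources" key comes from this guide; all
    other keys in the config are passed through unchanged.
    """
    config = json.loads(config_text)
    config["IPSources"] = ["netinfo", "host"]
    return json.dumps(config, indent=2)


def pick_task_ips(ips_by_source: dict, ip_sources: list) -> list:
    """Simplified model of an ordered IPSources list (not Mesos-DNS code):
    the first source that reports any address for the task wins."""
    for source in ip_sources:
        ips = ips_by_source.get(source)
        if ips:
            return ips
    return []


if __name__ == "__main__":
    # Illustrative config; a real Mesos-DNS config has more keys.
    print(prefer_container_ips('{"domain": "mesos", "IPSources": ["host"]}'))

    # One Calico task: its container IP (netinfo) and its agent IP (host).
    task = {"netinfo": ["192.168.84.192"], "host": ["172.24.197.101"]}
    print(pick_task_ips(task, ["netinfo", "host"]))  # ['192.168.84.192']
    print(pick_task_ips(task, ["host"]))             # ['172.24.197.101']
```

In this model, keeping "host" as the second entry means a task that reports no netinfo address still falls back to its Agent IP.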

For example, a Calico-networked task named nginx scaled to 3 instances will respond to DNS lookups with the Calico IPs:

```
[vagrant@calico-01 ~]$ nslookup nginx.marathon.mesos
Server:     172.24.197.101
Address:    172.24.197.101#53

Name:    nginx.marathon.mesos
Address: 192.168.84.192
Name:    nginx.marathon.mesos
Address: 192.168.50.65
Name:    nginx.marathon.mesos
Address: 192.168.50.66
```
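The same lookup can be checked from Python with the standard-library resolver. This sketch assumes the host's resolver already points at Mesos-DNS; `resolve_task_ips` and `unique_ipv4_addresses` are hypothetical helper names.

```python
import socket


def unique_ipv4_addresses(addrinfo: list) -> list:
    """Collect distinct IPv4 addresses from socket.getaddrinfo() results,
    keeping the order in which the resolver returned them."""
    addresses = []
    for family, _type, _proto, _canonname, sockaddr in addrinfo:
        if family == socket.AF_INET and sockaddr[0] not in addresses:
            addresses.append(sockaddr[0])
    return addresses


def resolve_task_ips(name: str) -> list:
    """Return every IPv4 address the system resolver reports for `name`."""
    # Restricting to SOCK_STREAM avoids one duplicate entry per socket type.
    infos = socket.getaddrinfo(name, None, socket.AF_INET, socket.SOCK_STREAM)
    return unique_ipv4_addresses(infos)
```

On a cluster host, `resolve_task_ips("nginx.marathon.mesos")` should return one address per running instance; the order may differ between lookups.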

Navstar / Spartan DNS

DC/OS 1.8+ includes Navstar & Spartan DNS services. These DNS services provide additional DNS entries which always resolve to a task’s Container IP.

The following address will resolve to a task’s Calico IP:

```
<task-name>.marathon.containerip.dcos.thisdcos.directory
```
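To make the two naming schemes concrete, here is a small sketch that builds both names from a Marathon app ID. It assumes a top-level app such as `nginx`; the helper name `task_dns_names` is hypothetical, and apps nested in Marathon groups are deliberately rejected rather than guessed at.

```python
def task_dns_names(app_id: str) -> dict:
    """Build the Mesos-DNS and Navstar names for a top-level Marathon app.

    Assumption: only IDs like "nginx" or "/nginx" are handled; naming for
    apps inside Marathon groups is not covered by this sketch.
    """
    name = app_id.strip("/")
    if not name or "/" in name:
        raise ValueError(f"expected a top-level Marathon app id, got {app_id!r}")
    return {
        # Mesos-DNS: Agent IP by default, Container IP once IPSources is changed.
        "mesos_dns": f"{name}.marathon.mesos",
        # Navstar / Spartan: always the task's Container (Calico) IP.
        "navstar": f"{name}.marathon.containerip.dcos.thisdcos.directory",
    }


if __name__ == "__main__":
    print(task_dns_names("/nginx"))
```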

Load Balancing / External Service Discovery

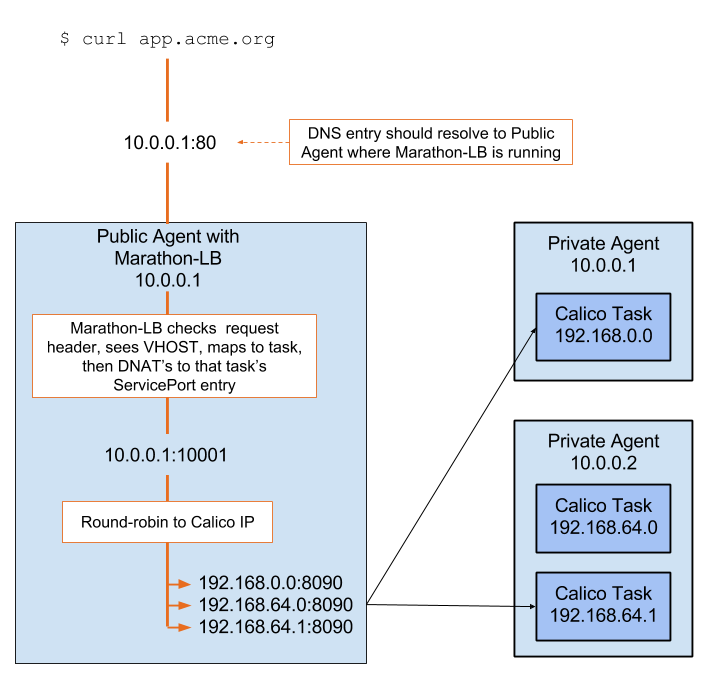

For accessing Calico tasks from outside the cluster, Marathon-LB can be used to forward requests on to Calico tasks. The following diagram demonstrates how this works:

As described in the official Marathon-LB documentation, select a HAPROXY_GROUP and, optionally, a virtual host (HAPROXY_{n}_VHOST, for example HAPROXY_0_VHOST for the first port) for your application in its Marathon application definition.

Additionally, for Calico, you need to tell Marathon-LB explicitly which port to load balance to, by setting containerPort in your application’s portMappings:

```json
{
  "id": "nginx",
  "instances": 3,
  "labels": {
    "HAPROXY_GROUP": "external",
    "HAPROXY_0_VHOST": "mytask.acme.org"
  },
  "container": {
    "type": "DOCKER",
    "docker": {
      "image": "nginx",
      "network": "USER",
      "portMappings": [{"containerPort": 80}]
    }
  },
  "ipAddress": {
    "networkName": "calico"
  }
}
```
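Before submitting an app definition, it can help to check that it carries the pieces Marathon-LB needs for a Calico task. The sketch below is a hypothetical pre-flight check that mirrors this guide (an `HAPROXY_GROUP` label, an explicit `containerPort`, and the `USER` network with a Calico `networkName` as in the example above); it is not Marathon-LB's own validation, and the default network name `calico` is an assumption taken from the example.

```python
import json


def marathon_lb_problems(app: dict, network_name: str = "calico") -> list:
    """Return problems that would stop Marathon-LB balancing to a Calico task.

    These checks mirror this guide only; they are not Marathon-LB's own
    validation logic. `network_name` defaults to "calico" as in the example.
    """
    problems = []
    if "HAPROXY_GROUP" not in app.get("labels", {}):
        problems.append("labels.HAPROXY_GROUP is not set")
    docker = app.get("container", {}).get("docker", {})
    mappings = docker.get("portMappings") or []
    # Marathon-LB needs an explicit container port to balance to.
    if not any("containerPort" in m for m in mappings):
        problems.append("no portMappings entry sets containerPort")
    # The example uses the Docker USER network plus a named Calico network.
    if docker.get("network") != "USER":
        problems.append('container.docker.network is not "USER"')
    if app.get("ipAddress", {}).get("networkName") != network_name:
        problems.append(f'ipAddress.networkName is not "{network_name}"')
    return problems


if __name__ == "__main__":
    example = json.loads("""
    {"id": "nginx", "instances": 3,
     "labels": {"HAPROXY_GROUP": "external", "HAPROXY_0_VHOST": "mytask.acme.org"},
     "container": {"type": "DOCKER",
                   "docker": {"image": "nginx", "network": "USER",
                              "portMappings": [{"containerPort": 80}]}},
     "ipAddress": {"networkName": "calico"}}
    """)
    print(marathon_lb_problems(example))  # []
```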

Requests made to the VHOST URL (from a host which resolves the address to the public slave) will be fulfilled by the Calico task backing it. We can simulate this resolution with a simple curl:

```shell
curl -H "Host: mytask.acme.org" 172.24.197.101
```

Note: Replace 172.24.197.101 with the IP of the public slave where Marathon-LB is running.
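Where curl is not available, the same request can be sent from Python. This is a minimal sketch with a hypothetical helper name, `fetch_via_vhost`; like `curl -H`, it sets the `Host` header explicitly so that Marathon-LB can match the virtual host even though the connection goes to a raw IP address.

```python
import http.client


def fetch_via_vhost(lb_ip: str, vhost: str, port: int = 80, path: str = "/"):
    """GET `path` from the Marathon-LB host, presenting `vhost` as the Host header."""
    conn = http.client.HTTPConnection(lb_ip, port, timeout=5)
    try:
        # Supplying "Host" ourselves stops http.client from adding its own,
        # so HAProxy sees the virtual host name instead of the IP address.
        conn.request("GET", path, headers={"Host": vhost})
        resp = conn.getresponse()
        return resp.status, resp.read()
    finally:
        conn.close()
```

For example, `fetch_via_vhost("172.24.197.101", "mytask.acme.org")` returns the status code and response body; as with the curl command, replace the IP with that of the public slave running Marathon-LB.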

A look at the haproxy.cfg generated by Marathon-LB shows that requests to this VHOST are distributed round-robin across the Calico IPs assigned to our nginx instances:

```
docker exec marathon-lb cat haproxy.cfg
...
backend nginx_10000
  balance roundrobin
  mode http
  option forwardfor
  http-request set-header X-Forwarded-Port %[dst_port]
  http-request add-header X-Forwarded-Proto https if { ssl_fc }
  server 172_24_197_101_192_168_84_196_80 192.168.84.196:80
  server 172_24_197_101_192_168_84_197_80 192.168.84.197:80
  server 172_24_197_102_192_168_50_70_80 192.168.50.70:80
```
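To confirm which Calico IPs a backend points at, the `server` lines can be pulled out of the generated config, for example from the output of the `docker exec` command above. The helpers below are a hypothetical sketch: `backend_servers` does simple line-based parsing rather than full HAProxy config parsing, and `round_robin` is a simplified model of equal-weight `balance roundrobin`, not HAProxy's actual scheduler.

```python
import itertools

SECTION_KEYWORDS = ("global", "defaults", "frontend", "backend", "listen")


def backend_servers(cfg_text: str, backend: str) -> list:
    """Return the address:port of each `server` line in one backend section."""
    servers = []
    in_backend = False
    for raw in cfg_text.splitlines():
        words = raw.split()
        if not words:
            continue
        if words[0] in SECTION_KEYWORDS:
            # A new section starts; track whether it is the one we want.
            in_backend = words[0] == "backend" and words[1:2] == [backend]
        elif in_backend and words[0] == "server" and len(words) >= 3:
            # Format: server <name> <address:port> [options...]
            servers.append(words[2])
    return servers


def round_robin(servers: list, requests: int) -> list:
    """Simplified equal-weight round robin: cycle through servers in order."""
    return list(itertools.islice(itertools.cycle(servers), requests))


if __name__ == "__main__":
    sample = """
backend nginx_10000
  balance roundrobin
  mode http
  server 172_24_197_101_192_168_84_196_80 192.168.84.196:80
  server 172_24_197_101_192_168_84_197_80 192.168.84.197:80
  server 172_24_197_102_192_168_50_70_80 192.168.50.70:80
"""
    targets = backend_servers(sample, "nginx_10000")
    print(targets)
    print(round_robin(targets, 4))
```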